Before the Storm: How Supercomputers Can Provide Better Weather and Tornado Forecasts

A significant number of deaths and a large share of property losses are associated with severe thunderstorms and related phenomena, including tornadoes and hail, as well as other natural disasters such as hurricanes, droughts, and flash floods. Of all weather hazards, tornadic thunderstorms allow the shortest advance warning, usually from minutes to hours. The current average warning lead time for tornadoes is less than 20 minutes, so extending it by as little as one minute could make a considerable difference.

Researchers now have a new tool to aid in weather and tornado forecasting. In 2016, the National Oceanic and Atmospheric Administration (NOAA) launched GOES-16, the first of the newest-generation geostationary weather satellites, which is designed to send high-resolution, high-frequency and high-accuracy images from its Advanced Baseline Imager (ABI) instrument. GOES-16 provides a full-disk image of the Earth every 15 minutes and, at the same time, an image of the continental U.S. every five minutes.

A team of Pennsylvania State University researchers is the first to directly use satellite radiance data from GOES-16 in numerical models designed to forecast tornadic thunderstorms, which cause 17 percent of all severe weather deaths in the United States. The team is led by Dr. Fuqing Zhang, Distinguished Professor at Pennsylvania State University and Director of the Penn State Center for Advanced Data Assimilation and Predictability Techniques. Its research has the potential to provide earlier tornado warnings, helping to save lives and improve safety during extreme weather events. “There is a huge amount of data that is generated during our tornadic thunderstorm research. A single forecast can contain up to tens of terabytes of data that is either ingested from the satellite or generated by the numerical model. Our work requires using a supercomputer to perform the simulations, and to analyze and archive the data,” states Zhang.

Using satellites for weather prediction

The Penn State team is at the international forefront in predictions and modeling of severe weather and hurricanes. In a chance encounter on an airplane about a decade ago, a high-ranking officer at NOAA sat next to a high-ranking officer from the National Science Foundation (NSF). This encounter facilitated the early computing support of NOAA’s Hurricane Forecast Improvement Program (HFIP) that tremendously benefited Zhang’s exploratory hurricane research.

Zhang put forward his team’s work and indicated that they needed supercomputers to run their research simulations. As a result, Penn State has so far been awarded time on various supercomputers, in particular at the Texas Advanced Computing Center (TACC). That computing time has benefited, and will continue to benefit, the nation through state-of-the-art weather simulations and improved future warnings.

The most significant advantage of geostationary satellites over other observation platforms is that they can continuously provide observations of a vast region without any gaps. For example, when thunderstorms and hurricanes occur, GOES-16 can image them as frequently as every minute. According to Zhang, “With the 16 bands and the advantage of the newest-generation geostationary satellites, we can extract valuable information that could benefit not only weather prediction but also hydrology, forestry, aviation, transportation, and fire-weather warning.”

Penn State research methodology

Zhang's team uses observations from both the geostationary satellite (GOES-16) and ground-based Doppler weather radars. A complex radiative transfer model links what the numerical model produces to what the satellite sees, and the two are combined using an ensemble-based data assimilation technique. Ensemble forecasting runs multiple simulations (predictions) of future weather with numerical models, and forecasters currently use it as the primary tool for probabilistic weather forecasting.
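To make the idea of ensemble-based data assimilation concrete, here is a minimal sketch of a textbook stochastic ensemble Kalman filter update for a single scalar observation. It is not the Penn State system, which the article says contains several unique schemes of its own. The function name `enkf_update`, the three-variable state and the `obs_operator` callable (a stand-in for the radiative transfer model) are all assumptions made for illustration, and the numbers are synthetic.

```python
import numpy as np

def enkf_update(ensemble, obs_value, obs_error_var, obs_operator, rng):
    """Generic stochastic EnKF update for one scalar observation.

    ensemble:     array of shape (n_members, n_state), the prior forecasts
    obs_operator: hypothetical callable mapping a state vector to the
                  simulated observation (stand-in for radiative transfer)
    """
    n_members = ensemble.shape[0]
    # What each member "would have seen" from the satellite.
    simulated = np.array([obs_operator(member) for member in ensemble])
    state_anom = ensemble - ensemble.mean(axis=0)
    sim_anom = simulated - simulated.mean()
    # Ensemble estimates of cov(state, simulated obs) and var(simulated obs).
    cov_state_sim = state_anom.T @ sim_anom / (n_members - 1)
    var_sim = sim_anom @ sim_anom / (n_members - 1)
    gain = cov_state_sim / (var_sim + obs_error_var)
    # Each member assimilates its own perturbed copy of the observation.
    perturbed_obs = obs_value + rng.normal(0.0, np.sqrt(obs_error_var), n_members)
    return ensemble + np.outer(perturbed_obs - simulated, gain)

# Synthetic example: 40 members, 3 state variables, first variable observed.
rng = np.random.default_rng(0)
prior = rng.normal(0.0, 1.0, size=(40, 3))
posterior = enkf_update(prior, obs_value=2.0, obs_error_var=0.01,
                        obs_operator=lambda state: state[0], rng=rng)
```

After the update, the ensemble mean of the observed variable moves toward the observation and the ensemble spread shrinks, which is the basic effect an assimilation step is meant to have.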

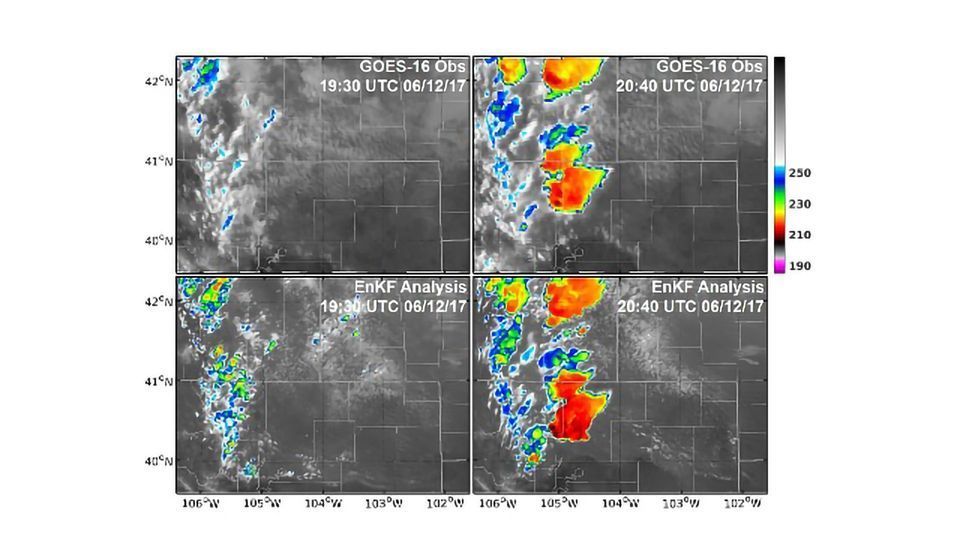

The simulations are initialized with 40 realizations of short-term ensemble forecasts that differ slightly in their initial conditions to represent uncertainty in the atmospheric state. After this ensemble forecast has run long enough for clouds and other features to develop, observations from GOES-16 are assimilated every five minutes over a total of 100 minutes. Free-running ensemble forecasts are launched every 20 minutes to examine how the thunderstorm predictions change. The predictions are verified against radar observations using mid-tropospheric rotation, which is one of the characteristics of severe thunderstorms. An example of a weather forecast image is shown in the accompanying image.
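The timing of this experiment can be laid out in a few lines. The sketch below is only an illustration of the schedule: the article does not say whether the first assimilation happens at minute 0 or minute 5, or exactly when the free-running forecasts start, so the choices here (first assimilation at minute 5, forecasts launched at each multiple of 20 minutes) are assumptions, as is the function name `cycling_schedule`.

```python
ASSIM_INTERVAL_MIN = 5      # GOES-16 data assimilated every five minutes
ASSIM_WINDOW_MIN = 100      # total length of the assimilation period
FORECAST_INTERVAL_MIN = 20  # free-running ensemble forecasts every 20 minutes

def cycling_schedule(interval=ASSIM_INTERVAL_MIN,
                     window=ASSIM_WINDOW_MIN,
                     forecast_interval=FORECAST_INTERVAL_MIN):
    """Return (assimilation times, forecast launch times) in minutes.

    Assumption: the first assimilation is at minute `interval`, and a
    free forecast starts from each analysis at a multiple of
    `forecast_interval`.
    """
    assim_times = list(range(interval, window + 1, interval))
    forecast_times = [t for t in assim_times if t % forecast_interval == 0]
    return assim_times, forecast_times

assim_times, forecast_times = cycling_schedule()
print(len(assim_times), "assimilation cycles")
print("forecast launches at minutes", forecast_times)
```

Under these assumptions there are 20 assimilation cycles and five free-running forecasts, each of which can be compared against the radar observations to see how the prediction evolves as more satellite data is assimilated.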

Supercomputers power tornado and weather forecast research

The team performs most of its simulations on TACC’s Stampede2 supercomputer, which is the most powerful supercomputer in the U.S. for open science research. Stampede2 is powered by Intel Xeon Scalable processors, with interconnection provided by the high-performance Intel Omni-Path Architecture. Zhang indicates that the team hopes to continue its research on TACC’s future supercomputer, named Frontera, which will be based on 2nd Generation Intel Xeon Scalable processors. Beyond providing computing time, TACC also supplies strong support in optimizing code and answering technical questions, Zhang reports.

Software and models

Zhang’s Penn State research team uses high-resolution numerical weather prediction models combined with newly deployed remote-sensing platforms to improve its predictions. The numerical weather prediction model is the Weather Research and Forecasting (WRF) model, an open-source software framework developed primarily at the National Center for Atmospheric Research (NCAR). The WRF-based cloud-allowing ensemble data assimilation and prediction system is developed and maintained by Zhang's team, with funding provided by the National Science Foundation, the Office of Naval Research, NOAA, and NASA.

The WRF model predicts how atmospheric temperature, moisture and winds will evolve from a given initial state, based on the physical laws of dynamics and thermodynamics. Both the WRF model and the Penn State ensemble data assimilation software are free and can be used by other researchers. WRF is one of the most popular models in both the research and operational meteorology communities, and it has been widely used to aid predictions and warnings of severe weather, including tornadoes, by multiple governmental agencies such as NOAA’s Storm Prediction Center. According to Zhang, “The ensemble data assimilation system used in this study was entirely developed by our research group. It includes several unique schemes that we found could improve the usefulness of all-sky satellite radiance observations such as those from GOES-16.”

The team uses MATLAB, Python and graphics software for visualization, and MPI for parallelization so that large numbers of observations (typically tens of thousands at a time) can be ingested simultaneously and in a timely manner. “We have been using MPI for parallelization of the data assimilation system. With that, we can horizontally divide the model grid into smaller tiles and each tile can process observations simultaneously. The numerical weather prediction model (WRF model) also used horizontal decomposition for parallelization. We have examined the scaling of our data assimilation system using 4 to 1024 processors and it shows very good scaling. This is expected since the most computationally intensive calculations are parallelized by horizontal decomposition. The WRF model is also the same.”
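The horizontal decomposition described in the quote can be sketched without any MPI calls. The example below splits a grid into tiles and assigns each observation to the tile that contains it; in an MPI program each tile would typically belong to one process. The function names, the grid size and the observation locations are all hypothetical, and the sketch ignores how observations near a tile edge would be shared with neighboring tiles.

```python
def split_range(n_points, n_tiles):
    """Split grid indices 0..n_points-1 into contiguous, near-equal ranges."""
    base, extra = divmod(n_points, n_tiles)
    bounds, start = [], 0
    for tile in range(n_tiles):
        size = base + (1 if tile < extra else 0)
        bounds.append((start, start + size))  # half-open range [start, end)
        start += size
    return bounds

def find_tile(index, bounds):
    """Return the position of the range in `bounds` that contains `index`."""
    return next(t for t, (lo, hi) in enumerate(bounds) if lo <= index < hi)

def assign_obs_to_tiles(obs_ij, nx, ny, tiles_x, tiles_y):
    """Group observation grid locations (i, j) by the tile that owns them."""
    x_bounds = split_range(nx, tiles_x)
    y_bounds = split_range(ny, tiles_y)
    tiles = {(tx, ty): [] for tx in range(tiles_x) for ty in range(tiles_y)}
    for i, j in obs_ij:
        tiles[(find_tile(i, x_bounds), find_tile(j, y_bounds))].append((i, j))
    return tiles

# Hypothetical 10 x 10 grid, 4 x 2 tiles, four made-up observation locations.
obs = [(0, 0), (4, 7), (9, 9), (6, 2)]
tiles = assign_obs_to_tiles(obs, nx=10, ny=10, tiles_x=4, tiles_y=2)
```

Because every tile holds its own list of observations, the tiles can be processed independently, which is the property that makes this kind of decomposition parallelize well.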

“Since the WRF model has very good scaling, the wall-clock times needed for one WRF-model simulation to finish are almost inversely proportional to the processors that we used. The massive CPUs provided by supercomputers saved a significant amount of time waiting for the results,” states Zhang.
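The scaling relationship Zhang describes can be written as a short calculation. With ideal scaling, wall-clock time is inversely proportional to the number of processors, and parallel efficiency measures how close a real run comes to that ideal. The function names and the reference time below are illustrative assumptions, not measurements from the team's runs; only the 4 and 1024 processor counts are taken from the article.

```python
def ideal_wall_clock(t_ref, p_ref, p):
    """Wall-clock time on p processors if time scales as 1/p.

    t_ref is the time measured on p_ref processors.
    """
    return t_ref * p_ref / p

def parallel_efficiency(t_ref, p_ref, t, p):
    """Ratio of ideal to actual time on p processors (1.0 means ideal)."""
    return ideal_wall_clock(t_ref, p_ref, p) / t

# Hypothetical reference: a run taking 256 time units on 4 processors.
t_on_1024 = ideal_wall_clock(256.0, 4, 1024)
efficiency = parallel_efficiency(256.0, 4, t_on_1024, 1024)
```

Supplying measured times instead of the ideal value for `t` gives the efficiency of an actual run; values close to 1.0 correspond to the "very good scaling" described in the quote.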

Challenges for future research

“To better predict weather events in the future, our research team needs better computer software and storage. In addition, we need to resolve issues with input/output bottlenecks in current computer systems which slow down research as well as the sub-optimum parallelization of the ensemble data assimilation system we developed. Advancement in parallelization in areas such as quantum computers, and expert support in more efficient parallelization for data assimilation programs may hold promise for our future research,” concludes Zhang.

Linda Barney is the founder and owner of Barney and Associates, a technical/marketing writing, training and web design firm in Beaverton, OR.

This article was produced as part of Intel’s HPC editorial program, with the goal of highlighting cutting-edge science, research and innovation driven by the HPC community through advanced technology. The publisher of the content has final editing rights and determines what articles are published.